A forgotten AI debate from 38 years ago feels uncomfortably relevant today

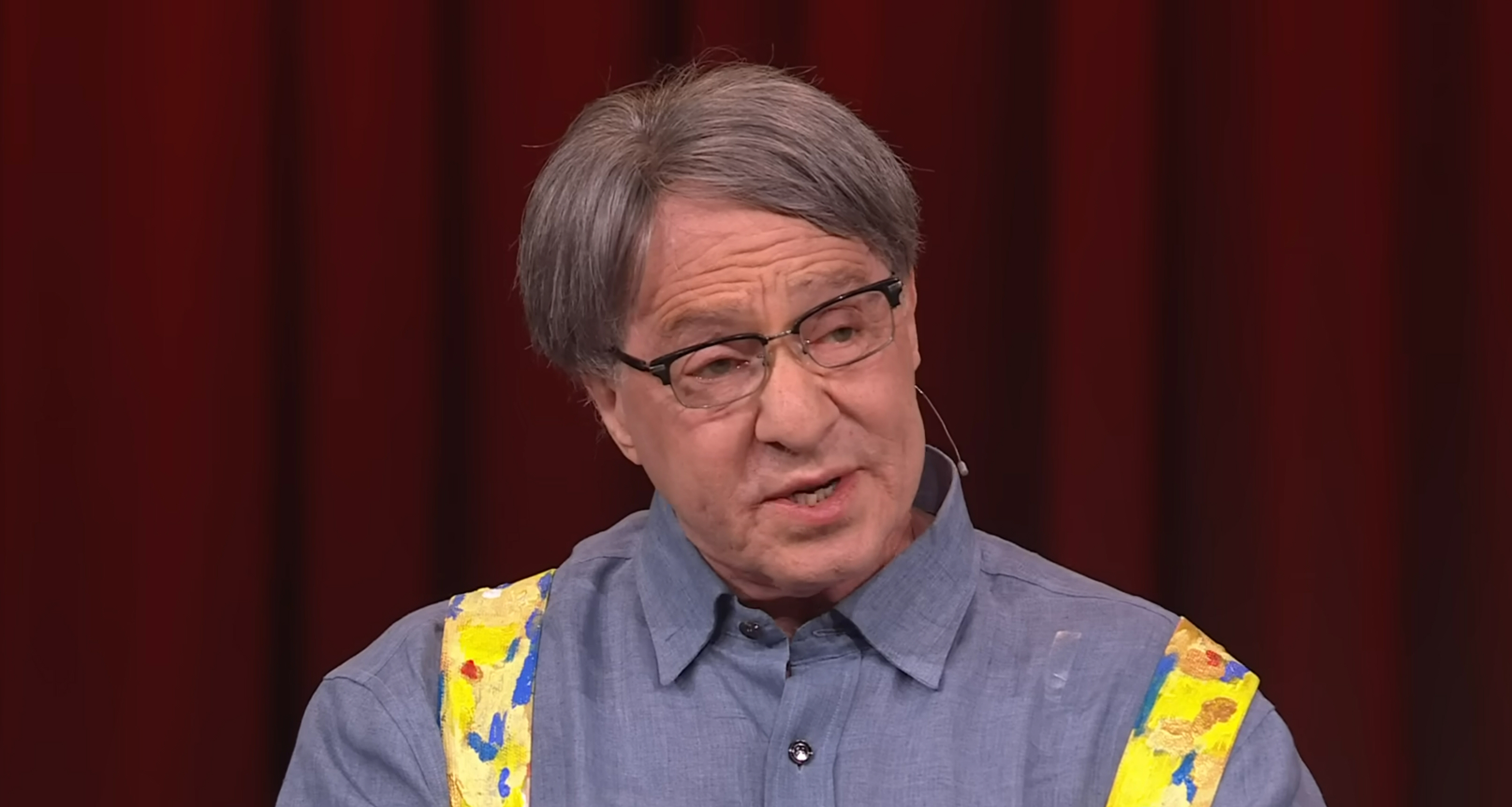

(Image credit: Oracle)

In 1987, long before artificial intelligence became the mass-market obsession it is today, Computerworld convened a roundtable to discuss what was then a new and unsettled question: how AI might intersect with database systems.

What makes the discussion notable in hindsight is not the optimism around AI, which was common at the time, but Oracle co-founder Larry Ellison’s repeated insistence on limits.

While others described AI as a new architectural layer or even a "new species" of software, Ellison argued that intelligence should be applied sparingly, embedded deeply, and never treated as a universal solution.

AI merely a tool

“Our primary interest at Oracle is applying expert system technology to the needs of our own customer base,” Ellison said. “We are a data base management system company, and our users are primary systems developers, programmers, systems analysts, and MIS directors.”

That framing set the tone for everything that followed. Ellison was not interested in AI as an end-user novelty or as a standalone category. He saw it as an internal tool, one that should improve how systems are built rather than redefine what systems are.

Many vendors treated expert systems as a way to replicate human decision making wholesale. Tom Kehler described systems that encoded experience and judgment to handle complex tasks such as underwriting or custom order processing.

Landry went further, arguing that AI could form the architecture for an entirely new generation of applications, built as collections of cooperating expert systems.

Ellison pushed back on this notion, prompting moderator Esther Dyson to ask: "Your vision of AI doesn’t seem to be quite the same as Tom Kehler’s, even though you have this supposed complementary relationship. He differentiates between the AI application and the data base application, whereas you see AI merely as a tool for building data bases and applications."

“Many expert systems are used to automate decision making,” Ellison replied. “But a systems analyst is an expert, too. If you partially automate his function, that’s another form of expert system.”

Ellison drew a clear line between processes that genuinely require judgment and those that don't. In doing so, he rejected what might now be called AI maximalism.

“In fact, not all application users are experts or even specialists,” he said. “For example, an order processing application may have dozens of clerks who process simple orders. Instead of the order processing example, think about checking account processing. Now, there are no Christmas specials on that. There are no special prices. Instead, performance is all-critical, and recovery is all-critical.”

"The height of nonsense"

When Dyson suggested a rule such as automatically transferring funds if an account balance dropped below a threshold, Ellison was blunt.

“That can be performed algorithmically because it’s unchanging,” he said. “The application won’t change, and to build it as an expert system, I think, is the height of nonsense.”
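To make the point concrete, the kind of rule Dyson described fits in a few lines of ordinary code. The sketch below is purely illustrative: the `Account` class, the `top_up_if_low` function, and the threshold and target figures are hypothetical names and values chosen for this example, not anything discussed at the roundtable.

```python
from dataclasses import dataclass


@dataclass
class Account:
    """Hypothetical account holding a balance in whole cents."""
    balance: int


def top_up_if_low(checking: Account, savings: Account,
                  threshold: int, target: int) -> int:
    """Move funds from savings to checking when checking falls below threshold.

    Returns the amount transferred (0 if no transfer was needed).
    The rule is fixed and deterministic: no inference, no knowledge base.
    """
    if checking.balance >= threshold:
        return 0
    # Transfer enough to reach the target, limited by what savings holds.
    amount = min(target - checking.balance, savings.balance)
    amount = max(amount, 0)
    savings.balance -= amount
    checking.balance += amount
    return amount
```

A rule like this never changes shape, which is the property Ellison was pointing to: there is nothing here for an expert system to reason about.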

This was a striking statement in 1987, when expert systems were widely promoted as the future of enterprise software. Ellison went further, issuing a warning that sounds surprisingly modern.

“And so I say that a whole generation is going to be built on nothing but expert systems technology is a misuse of expert systems. I think expert systems should be selectively employed. It is human expertise done artificially by computers, and everything we do requires expertise.”

Rather than applying AI everywhere, Ellison wanted to focus it where it changed the economics or usability of system development itself. That led him to what he called fifth-generation tools, which he framed not as programming languages but as higher-level systems that eliminated procedural complexity.

“We see enormous benefits in providing fifth-generation tools,” he said. “I don’t want to use the word ‘languages,’ because they really aren’t programming languages anymore. They are more.”

He described an interactive, declarative approach to building applications, one where intent replaced instruction.

“I can sit down next to you, and you can tell me what your requirements are, and rather than me documenting your requirements, I’ll sit and build a system while we’re talking together, and you can look over my shoulder and say, ‘No, that’s not what I meant,’ and change things.”

The promise was not just speed, but a change in who controlled software.

“So not only is it a productivity change, a quantitative change, it’s also a qualitative change in the way you approach the problem.”

Not anti-AI

That philosophy carried through Oracle’s later product strategy, from early CASE tools to its eventual embrace of web-based architectures. A decade later, Ellison would argue just as forcefully that application logic belonged on servers, not on PCs.

“We’re so convinced that having the application and data on the server is better, even if you’ve got a PC,” he told Computerworld in 1997. “We believe there will be almost no demand for client/server as soon as this comes out.”

By 2000, he was even more forthright.

“People are taking their apps off PCs and putting them on servers,” ZDNET reported Ellison as saying. “The only things left on PCs are Office and games.”

In retrospect, Ellison’s predictions were often early and sometimes overstated. Thin clients did not replace PCs, and expert systems did not transform enterprise software overnight. Yet the direction he described proved durable.

Application logic moved to servers, browsers became the dominant interface, and declarative tooling became a core design goal across the industry.

What the 1987 roundtable captures is the philosophical foundation of that shift. While others debated how much intelligence to add to applications, Ellison questioned where intelligence belonged at all.

He treated AI not as a destination, but as an implementation detail, valuable only when it reduced complexity or improved leverage.

As AI once again dominates enterprise strategy discussions, the caution embedded in Ellison’s early comments feels newly relevant.

His core argument was not anti-AI, but anti-abstraction for its own sake. Intelligence mattered, but only when it served a larger architectural goal.

In 1987, that goal was making databases the center of application development. Decades later, the same instinct underpins modern cloud platforms. The technology has changed, but the tension Ellison identified remains unresolved: how much intelligence systems need, and how much complexity users are willing to tolerate to get it.

Wayne Williams is a freelancer writing news for TechRadar Pro. He has been writing about computers, technology, and the web for 30 years. In that time he wrote for most of the UK’s PC magazines, and launched, edited and published a number of them too.
